My X feed won’t stop talking about DeepSeek’s R1, so here are my two cents.

What is DeepSeek’s R1?

A reasoning LM with 671B parameters out of China. The model has been open-sourced, or open-weighted to be precise: you can download the weights and work with them (plus read their paper), but you can’t inspect the development code. TL;DR: It’s a masterpiece!



I tested the R1-Lite model already in November, and it was meh

I compared its reasoning capabilities with o1, Claude 3.5 Sonnet, and Grok 2. Each model had to solve a math riddle that required reasoning.

The surprise? Grok 2 (a non-reasoning model) solved it.

Then, on January 20, the full release: R1

Contrary to what the whole industry believed, DeepSeek also decided to open-source the full R1 release (open weights plus a paper on how to build it).

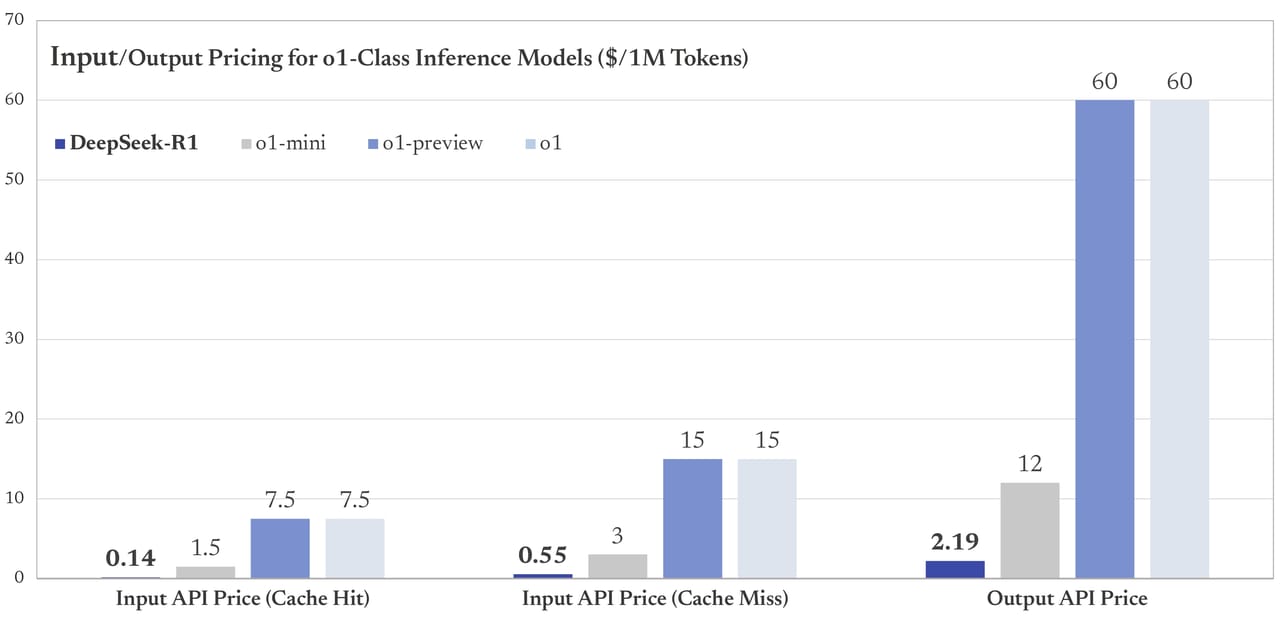

And the kicker? OpenAI reportedly spent $600M+ on o1. Google? Billions on Gemini. DeepSeek: a reported $6M or so for training, and the model is extremely cheap to run.

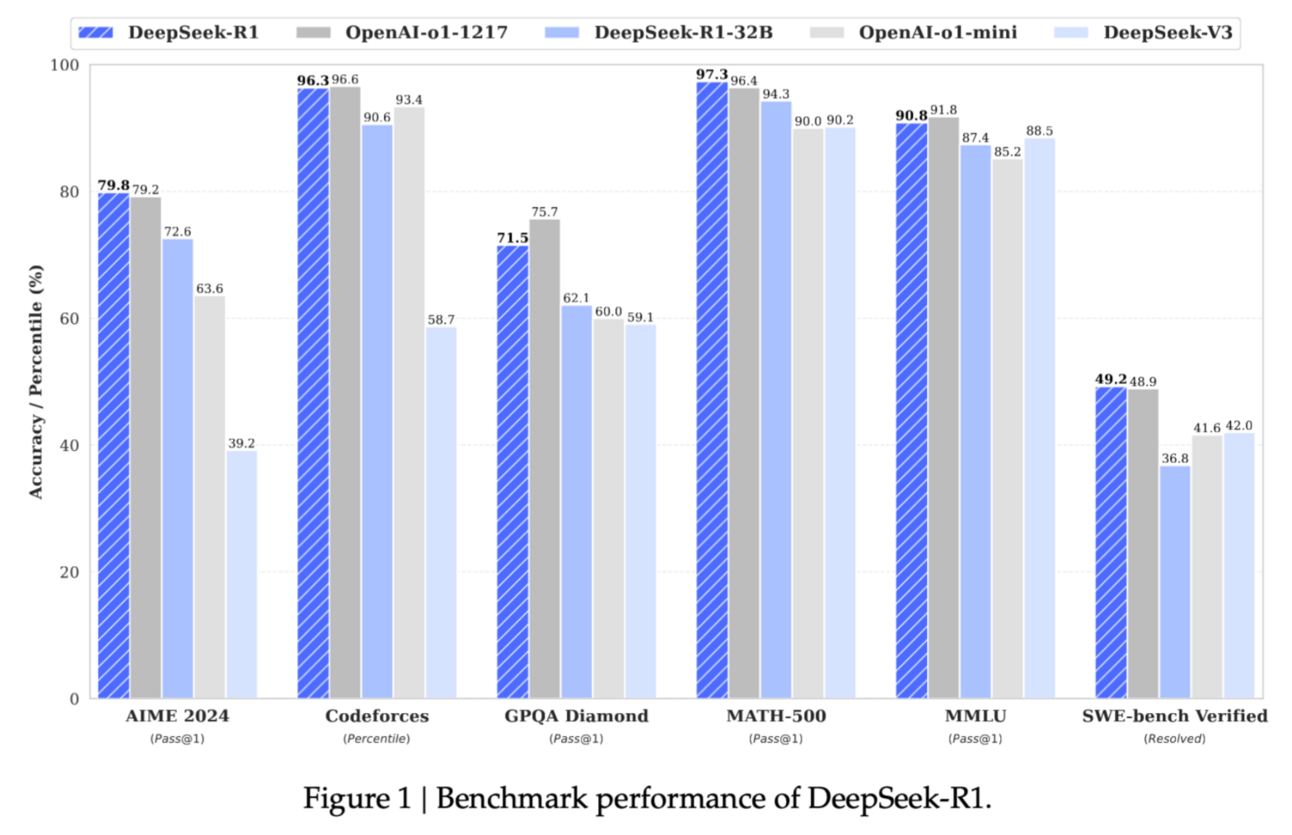

The benchmark results are astounding.

One can believe the actual figures or not, but without a doubt this model is a masterpiece and starts a new AI model era!

Let me unpack:

A: It has a huge focus on Reinforcement Learning (RL). They have introduced DeepSeek-R1-Zero, which has been trained entirely without supervised fine-tuning, showcasing advanced reasoning behaviors but struggling with readability and language mixing.
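To make the RL idea more concrete, here is a minimal sketch of the two ingredients the paper describes for R1-Zero: rule-based rewards (a correct answer, plus the right output format) and advantages computed relative to a group of sampled answers (the paper calls the algorithm GRPO). The reward weights of 1.0 and 0.5, the toy completions, and the use of the population standard deviation are my assumptions for illustration; the actual policy update is left out.

```python
import re
import statistics


def rule_based_reward(completion: str, reference_answer: str) -> float:
    """Toy reward: 0.5 for following the <think> format, plus 1.0 for a correct answer.

    The weights are illustrative assumptions, not values from the paper.
    """
    # Format check: reasoning inside <think>...</think>, followed by a final answer.
    match = re.fullmatch(
        r"\s*<think>.*?</think>\s*(.+?)\s*", completion, flags=re.DOTALL
    )
    if match is None:
        return 0.0
    format_reward = 0.5
    accuracy_reward = 1.0 if match.group(1) == reference_answer else 0.0
    return format_reward + accuracy_reward


def group_relative_advantages(rewards):
    """Normalize each reward against the mean and spread of its own group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        # All samples scored the same, so there is no learning signal.
        return [0.0 for _ in rewards]
    return [(r - mean) / std for r in rewards]


# Four hypothetical samples for the prompt "What is 2 + 2?"
completions = [
    "<think>2 + 2 is 4</think> 4",
    "<think>maybe 5?</think> 5",
    "4",  # correct, but ignores the required format
    "<think>two plus two</think> 4",
]
rewards = [rule_based_reward(c, "4") for c in completions]
advantages = group_relative_advantages(rewards)
```

Samples that beat their group average get a positive advantage and are reinforced; the rest are pushed down. No learned reward model or human-labelled reasoning trace is needed, which is what makes the "pure RL" setup possible.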

It becomes more and more clear that the path to AGI is RL. See David Silver’s talk. (David Silver is a leading AI researcher, and the brain behind RL at DeepMind, contributing significantly to AlphaGo and AlphaZero.)

DeepSeek developed R1 with a multi-stage training pipeline incorporating cold-start data and iterative RL.

Cold-Start Enhancement?

It refers to the initial phase of training, where a small, high-quality dataset is used to fine-tune the model before the reinforcement learning (RL) stages. This gives the later stages a solid foundation and addresses issues like poor readability and language mixing by providing structured, coherent data from the start.

This method was crucial in achieving performance levels comparable to OpenAI's o1-1217 in reasoning tasks.

B: It effectively distills reasoning capabilities into smaller models. According to the paper, DeepSeek used knowledge distillation, where a smaller model learns to mimic the behavior of a larger, more capable model: they fine-tuned smaller open models (based on Qwen and Llama) on reasoning samples generated by R1. This lets the smaller models reach strong reasoning performance with far lower computational overhead.
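Here is a minimal sketch of what distillation looks like when it is done through training data: a large teacher model writes out reasoning traces, and those traces become the supervised fine-tuning set for a smaller student. Everything here is hypothetical: `toy_teacher` stands in for sampling from a real model, and the "keep only correct answers" filter is my simplification, not DeepSeek's exact recipe.

```python
from dataclasses import dataclass


@dataclass
class SFTExample:
    prompt: str
    target: str  # the teacher's reasoning trace plus its final answer


def toy_teacher(prompt: str) -> str:
    """Hypothetical stand-in for sampling a completion from the teacher model."""
    canned = {
        "What is 2 + 2?": "<think>2 + 2 = 4</think> 4",
        "What is 3 * 3?": "<think>3 * 3 = 6</think> 6",  # deliberately wrong
    }
    return canned[prompt]


def final_answer(completion: str) -> str:
    """Take whatever follows the closing </think> tag as the final answer."""
    return completion.split("</think>")[-1].strip()


def build_distillation_set(problems, teacher):
    """Keep only teacher completions whose final answer matches the reference."""
    dataset = []
    for prompt, reference in problems:
        completion = teacher(prompt)
        if final_answer(completion) == reference:
            dataset.append(SFTExample(prompt, completion))
    return dataset


problems = [("What is 2 + 2?", "4"), ("What is 3 * 3?", "9")]
distillation_set = build_distillation_set(problems, toy_teacher)
```

The student is then fine-tuned on `distillation_set` with ordinary supervised learning. It never has to discover the reasoning style through RL itself, which is why this route is so much cheaper.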

DeepSeek’s plans include improving multi-language capabilities, addressing prompt sensitivity, and optimizing RL for software engineering and broader task generalization.

(Recognize the global focus on software engineering with AI.)


DeepSeek slashes prices in training and inference

Their techniques are effective, no doubt. They are cutting costs by a factor of 45!

In addition, they have between 200 and 300 employees, not thousands.

The US is in panic mode.

NVIDIA stock is down 17%. No multi-billion dollar data centers needed.

So far I have not seen the US copy innovations from China, rather the other way around. But that’ll change for sure. The OpenAIs, xAIs, Anthropics, and Googles of the West would be ignorant not to adopt some of DeepSeek’s techniques.

We’ll see the Jevons paradox in action: as AI gets cheaper to use, overall usage goes up rather than down.

For the end user, this is good. This is what drives technological progress.

One other interesting observation about R1’s release is that it is not only “bad” news for the AI industry, but also good news for Apple. Apple? Yes, Apple. This post unpacks it. (My short explanation below.)

In short (I try):

R1 is an MoE (mixture-of-experts) model (an explanation of it right below). It has 671B parameters in total, of which 37B are active per token. Since it can’t be predicted in advance which 37B will be active, all parameters must remain in high-speed GPU memory.
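A toy sketch of why that is: a router scores the experts for every token and only the top few run, but different tokens pick different experts. The 8 experts, the top-2 choice, and the router scores are made-up numbers, not R1’s real configuration. The memory figures count weights only (no KV cache or activations), and the bit widths are assumptions.

```python
def top_k_experts(router_logits, k):
    """Return the indices of the k highest-scoring experts for one token."""
    ranked = sorted(
        range(len(router_logits)), key=lambda i: router_logits[i], reverse=True
    )
    return sorted(ranked[:k])


# Hypothetical router scores for two different tokens over 8 experts.
token_a = [0.1, 2.3, -0.5, 1.7, 0.0, -1.2, 0.4, 0.9]
token_b = [1.9, -0.3, 0.2, -0.8, 2.5, 0.1, -0.6, 0.3]

experts_a = top_k_experts(token_a, k=2)  # experts 1 and 3
experts_b = top_k_experts(token_b, k=2)  # experts 0 and 4

# Figures from the text: 671B total parameters, 37B active per token.
TOTAL_PARAMS = 671e9
ACTIVE_PARAMS = 37e9


def weights_gb(params, bits_per_param):
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS  # about 5.5% of the model per token
footprint_8bit = weights_gb(TOTAL_PARAMS, 8)    # 671 GB
footprint_4bit = weights_gb(TOTAL_PARAMS, 4)    # 335.5 GB
```

Each token only touches about 5.5% of the weights, but because the next token may route to entirely different experts, the full 671B parameters have to sit in fast memory.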

Apple Silicon uses Unified Memory and UltraFusion for cost-effective, high-capacity memory. Unified Memory shares resources between CPU and GPU, eliminating data copying. UltraFusion connects dies (the blocks of semiconductor material on which integrated circuits are fabricated) with 2.5TB/s of bandwidth, enabling models like DeepSeek R1 to run on compact, efficient setups.

The rumored M4 Ultra could offer 256GB memory at 1146GB/s.
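Why memory bandwidth matters so much: when generating text, every new token requires reading the active weights from memory once, so bandwidth divided by the bytes read per token gives a rough ceiling on tokens per second. This is a back-of-the-envelope sketch using the figures above. It assumes decoding is purely bandwidth-bound and ignores the KV cache, compute, and any overhead; the bit widths are my assumptions.

```python
def max_tokens_per_second(bandwidth_gb_s, active_params, bits_per_param):
    """Rough upper bound: memory bandwidth / bytes of weights read per token."""
    bytes_per_token = active_params * bits_per_param / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token


ACTIVE_PARAMS = 37e9   # active parameters per token, from the text
BANDWIDTH_GB_S = 1146  # rumored M4 Ultra memory bandwidth, from the text

upper_bound_4bit = max_tokens_per_second(BANDWIDTH_GB_S, ACTIVE_PARAMS, 4)  # about 62
upper_bound_8bit = max_tokens_per_second(BANDWIDTH_GB_S, ACTIVE_PARAMS, 8)  # about 31
```

Real-world throughput will be lower than these ceilings. Also note that this only covers speed: by the same arithmetic, 671B parameters at 4 bits come to roughly 335GB of weights, so capacity has to be checked separately against the 256GB figure.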

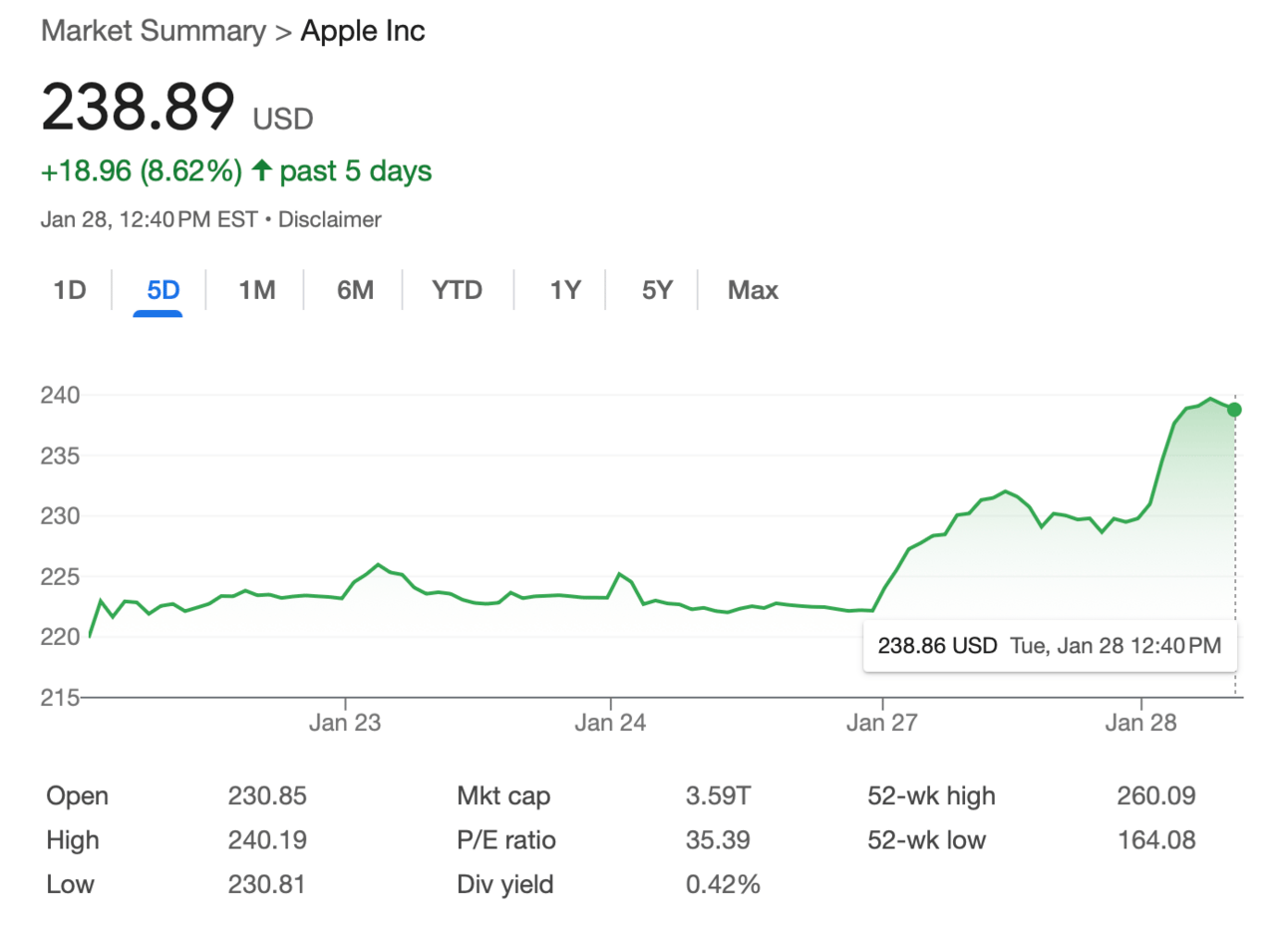

In comparison to other hardware, Apple has a huge edge, and this is somewhat reflected in their stock valuation.

And the EU? It really, really has to catch up, but there is not much in sight.🚨

Again, I love Europe, I love Germany, but the memes about the EU crack me up. 🤣 … though there is some truth to them.

Sources on DeepSeek:

- paper

- webpage

- model weights

I hope you enjoyed it.

Happy weekend!

Martin 🙇
