Today, I share an important consideration when launching a product—an eye-opener!

Further, learn how to implement your AI locally with high performance. This is a bit more technical, but if you understand how to tune it, you are an ASSET!

(DOWNLOAD the full code base below.) 😉

Plus, OpenAI’s newfound confidence that it knows how to build AGI.

Writer RAG Tool: build production-ready RAG apps in minutes with simple API calls.

Knowledge Graph integration for intelligent data retrieval and AI-powered interactions.

Streamlined full-stack platform eliminates complex setups for scalable, accurate AI workflows.

(✨ If you don’t want ads like these, Premium is the solution. It is like you are buying me a Starbucks Iced Honey Apple Almondmilk Flat White a month.)

When launching your product, consider this…

Last time, I shared “My 7-step blueprint for building projects” alongside the rapid development of AGIpath.net. Interesting: I still haven’t really launched, and I already have almost 200 visitors + 1k clicks.

However, a critical consideration before you launch: be aware of how many users your web app can handle. If it is too low, you must upscale your resources (e.g., choosing a larger dyno size on Heroku—upscaling is always easier than downscaling 😄).

Example Heroku.

But how do you know how many visitors and actions it can handle?

With the k6 package!

1. Install k6: brew install k6

2. Create a short test script (in stages, you can simulate visitors):

    import http from 'k6/http';
    import { check, sleep } from 'k6';

    export const options = {
      stages: [
        { duration: '1m', target: 100 },   // Ramp up to 100 users over 1 minute
        { duration: '2m', target: 30000 }, // Ramp up to 30,000 users over 2 minutes
        { duration: '1m', target: 0 },     // Ramp down to 0 users
      ],
    };

    export default function () {
      const url = 'https://agipath.net/';
      const res = http.get(url);

      // Record whether each response succeeded and came back quickly
      check(res, {
        'status is 200': (r) => r.status === 200,
        'response time < 500ms': (r) => r.timings.duration < 500,
      });

      // Add a small pause between iterations
      sleep(1);
    }

3. Run the test via terminal: k6 run load_test.js

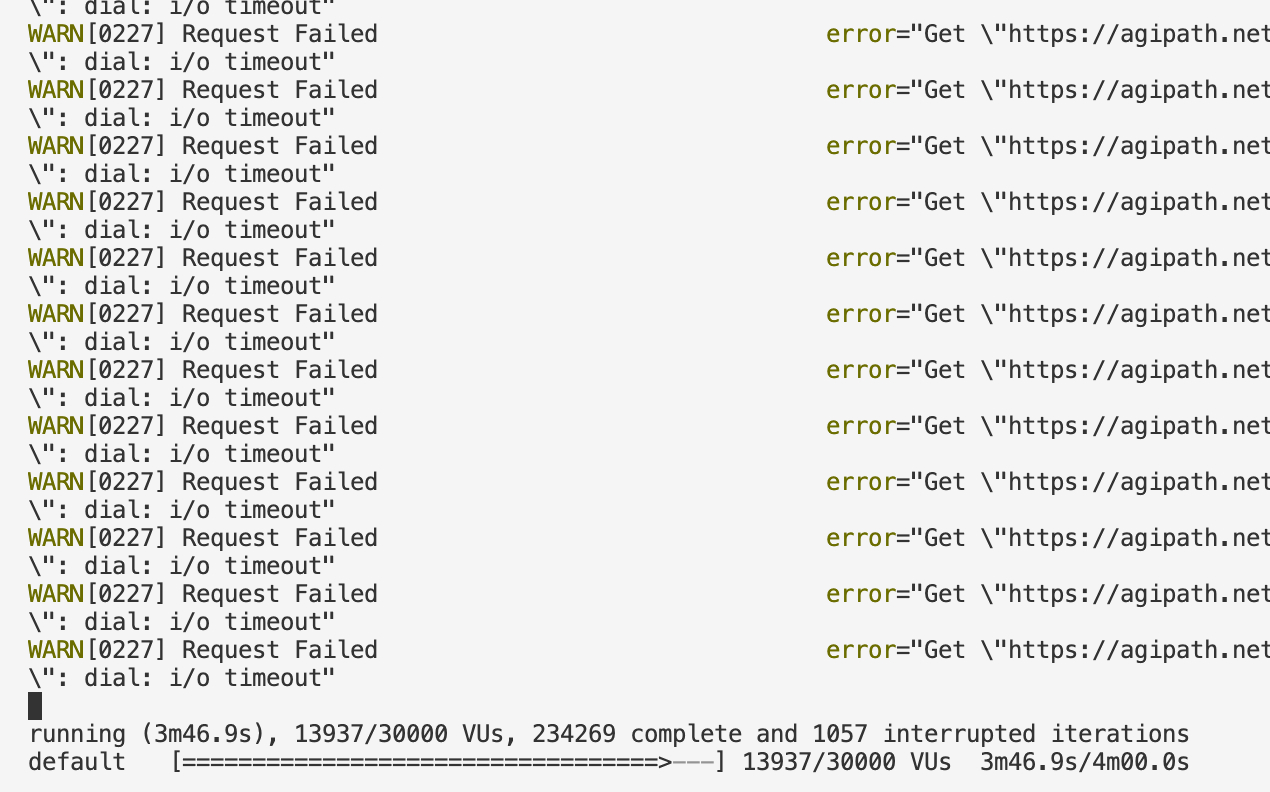

As it runs, k6 keeps increasing the simulated load, showing how much traffic your page takes before it breaks.

And, for me, it broke at some point. But that’s the whole point.

AGIpath.net could handle 1,200 requests/sec. If the system can process ~1,200 requests per second successfully before failures dominate, and assuming an average visitor makes ~2 requests per second (loading images, clicking a button, HTML, CSS, or JS requests, etc.), the system might handle roughly 1,200 / 2 = 600 concurrent users.

For me, 600 concurrent users are good enough. In the end, it’s a book page; how viral can it get? Also, hypothetically, if visitors arrive in an evenly distributed fashion, the page can serve about 2.1 million users in 1 hour. Definitely no scaling needed.
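The estimate above can be reproduced with a few lines of arithmetic. This is only a rough sketch: the 1,200 requests/sec and ~2 requests/sec per visitor come from the text, while the visit length is my assumption. A figure around 2.1 million per hour only works out if each visitor occupies the server for about one second, so a longer visit is shown for comparison.

```python
# Back-of-the-envelope capacity estimate from the k6 result.
# 1,200 requests/sec and ~2 requests/sec per visitor are taken from the text;
# the seconds_per_visit values are assumptions for illustration.


def concurrent_users(max_requests_per_sec: float, requests_per_user_per_sec: float) -> float:
    """Visitors that can be active at the same moment."""
    return max_requests_per_sec / requests_per_user_per_sec


def users_per_hour(concurrent: float, seconds_per_visit: float) -> float:
    """Visitors served in one hour if arrivals are spread evenly."""
    return concurrent * 3600 / seconds_per_visit


capacity = concurrent_users(1200, 2)
print(capacity)                      # 600.0
print(users_per_hour(capacity, 1))   # 2160000.0 (one-second visits)
print(users_per_hour(capacity, 60))  # 36000.0 (one-minute visits)
```

With one-second visits, this gives about 2.16 million visitors per hour, in line with the figure quoted above. With one-minute visits, it is 36,000 per hour, which is still plenty for a book page.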

Easiest way to deploy an AI/LLM locally, and how to make a local AI very performant!

I am on a mission. Soon, I will need the best-performing AI setup there is. (I’m building an AI product that answers voice requests by processing and analyzing 100k pages efficiently.) For this, I need an LLM that runs as well as possible on the hardware it is given.

I took the first step and would like to share my findings over the upcoming weeks.

First of all, by downloading Ollama and choosing the model you want to deploy, you get quite well-optimized LLM performance out of the box.

Just do the following:

Install Ollama. → https://ollama.com

Choose the right model from the model library and run in terminal: ollama run llama3.2 (This is a small language model, SLM, with 3B parameters; model size matters most for performance.)

With this, I get 50 tokens/sec on my MacBook M3 Max with 36GB Memory. That’s already great!

However, if you hack it right, you can get much more—but also mess things up.

What I tried out - Learnings!

The first step is to understand what hardware I’m working with because, for best performance, we want to run the AI directly on our laptop.

This is called running on bare metal.

My M3 Max has a GPU with many cores, and we want to use them.

There’s a great package called llama.cpp (.cpp means it is written in C++, which lends itself to very resource-efficient implementations).

So, we do the following steps:

Obtain llama.cpp - Clone the GitHub repository:

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

Install prerequisites (e.g., Xcode command-line tools).

Build with Metal Acceleration - In the llama.cpp directory, run:

make clean

LLAMA_METAL=1 make

Convert Model to llama.cpp Format - Use Meta’s original weights (or the official 3.2 release) and convert to GGUF. Example script (the script name and flags depend on your llama.cpp version; newer checkouts ship convert_hf_to_gguf.py instead of convert.py):

python3 convert.py <path_to_downloaded_llama32_weights> --outtype f16 ...

Ensure the resulting files have a .gguf extension (or .bin for older versions).

Place Model Files - Put the .gguf files into ./models/llama32-8B/ within llama.cpp.

Run the Model - Use the example command, adjusting the model path and settings:

./main -m ./models/llama32-8B/your-converted-model.gguf \
  -n 256 \
  --threads 12 \
  --ctx_size 2048

Add --gpu-layers <N> if you want more layers on the GPU. (Which we need to do!)

PRO TIP: I had my Cursor AI Agent do all of this for me—it was seamless.

Btw, in llama.cpp, “GPU layers” means the number of model layers that are offloaded to the GPU; the remaining layers run on the CPU.

When I ran it on 1 GPU layer, the tokens/sec was 45—worse than just setting things up with Ollama in 1 minute.

How do you determine optimal GPU layers?

Max GPU layers might not be optimal for LLM performance due to communication overhead between the layers.

My Cursor AI Agent handled the heavy lifting:

llama.cpp reports Metal’s “recommended max working set size,” the amount of memory the GPU can comfortably use, which limits how many GPU layers can be utilized.

In this case, it’s 28.

What is the optimal number of GPU layers?

I built a test script to run a standardized benchmark, making the GPU layers comparable.
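A benchmark like this could look roughly as follows. To be clear, this is not the script from the Premium download, just a minimal sketch. It assumes the llama.cpp binary prints its usual timing summary with an "eval time ... tokens per second" line, and that the binary accepts -m, -p, -n, and -ngl (binary names and defaults differ between llama.cpp versions). The function names, the prompt, and the paths in the usage note are all placeholders.

```python
import re
import statistics
import subprocess
from typing import Dict, Iterable, Optional

# Matches the generation-speed line of llama.cpp's timing summary, e.g.
#   "llama_print_timings:  eval time = ... ( ... ms per token, 77.67 tokens per second)"
# and skips the "prompt eval time" line, which measures prompt processing instead.
EVAL_LINE = re.compile(
    r"^\s*\S+:\s+eval time\s*=.*?([\d.]+)\s+tokens per second", re.MULTILINE
)


def parse_tokens_per_sec(log: str) -> Optional[float]:
    """Extract generation tokens/sec from llama.cpp output, or None if absent."""
    match = EVAL_LINE.search(log)
    return float(match.group(1)) if match else None


def run_once(binary: str, model: str, gpu_layers: int, prompt: str,
             n_predict: int = 256) -> Optional[float]:
    """Run one generation with a fixed prompt and return its tokens/sec."""
    result = subprocess.run(
        [binary, "-m", model, "-p", prompt,
         "-n", str(n_predict), "-ngl", str(gpu_layers)],
        capture_output=True, text=True, check=False,
    )
    # The timing summary is usually written to stderr; scan both streams.
    return parse_tokens_per_sec(result.stderr + result.stdout)


def sweep(binary: str, model: str, layer_counts: Iterable[int], prompt: str,
          repeats: int = 3) -> Dict[int, float]:
    """Average tokens/sec per GPU-layer setting over several identical runs."""
    results: Dict[int, float] = {}
    for layers in layer_counts:
        speeds = [run_once(binary, model, layers, prompt) for _ in range(repeats)]
        valid = [s for s in speeds if s is not None]
        if valid:
            results[layers] = statistics.mean(valid)
    return results


def best_setting(results: Dict[int, float]) -> int:
    """GPU-layer count with the highest average tokens/sec."""
    return max(results, key=results.get)
```

Calling something like sweep("./main", "./models/your-model.gguf", [1, 7, 14, 21, 28], "Explain RAG in one paragraph.") and passing the result to best_setting would then point to the fastest setting. Using the same prompt and token count for every run is what makes the settings comparable.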

It turns out that running on bare metal makes the PC run hot, so I put my laptop outside at -2°C 😄.

The testing results

28 GPU layers are the way to go! With that setup alone, we reached 77.67 tokens/sec.

For Premium subscribers: Download the full scripts/ code here (you need to be logged in to see the download option):

From here, we have many more performance experiments to run.

Model Optimization

Try different quantization levels (Q4_K vs Q3_K vs Q2_K)

✅ Test different numbers of GPU layers (-ngl 1 vs higher values)

Compilation Optimization

✅ Rebuild llama.cpp with optimized flags

👎 Enable OpenMP support

👎 Add BLAS optimizations for Apple Silicon -> 74.4 tokens/sec.

Parameter Tuning

Optimize thread count

Adjust context size

Fine-tune batch size settings

As you can see, I have also successfully rebuilt llama.cpp with optimized flags.

These were my settings. They did not push the tokens/sec significantly, but consistently a bit higher, to 78.37 tokens/sec:

ARM-specific optimizations:

-mcpu=apple-m1 -mtune=native

Vectorization flags:

-fvectorize -fslp-vectorize

Math handling:

-fno-finite-math-only

Loop optimizations:

-funroll-loops

The respective terminal command:

mkdir build && cd build && cmake .. -DLLAMA_METAL=ON -DCMAKE_C_FLAGS="-O3 -mcpu=apple-m1 -mtune=native -fvectorize -fslp-vectorize -fno-finite-math-only -funroll-loops" -DCMAKE_CXX_FLAGS="-O3 -mcpu=apple-m1 -mtune=native -fvectorize -fslp-vectorize -fno-finite-math-only -funroll-loops" && cmake --build . --config Release && cd ../.. && source venv/bin/activate && python benchmark_llama.py

OpenMP support and BLAS optimizations didn’t help me. In fact, they worsened the performance.

OpenMP is an API for parallel programming on multi-core processors using shared memory. It pushed my performance down to 75 tokens/sec.

BLAS (Basic Linear Algebra Subprograms) is a library standard for performing basic vector and matrix operations, widely used in high-performance computing. It pushed my performance down to 74.6 tokens/sec.
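To put these numbers side by side, here is a small sketch that expresses each experiment as a percentage change against the 28-GPU-layer result of 77.67 tokens/sec. All tokens/sec values are the ones reported in the text; the dictionary layout and the function name are just my illustration.

```python
# Tokens/sec figures as reported in the text above.
BASELINE = 77.67  # 28 GPU layers, before rebuilding with extra options

experiments = {
    "optimized compiler flags": 78.37,
    "OpenMP enabled": 75.0,
    "BLAS enabled": 74.6,
}


def percent_change(value: float, baseline: float) -> float:
    """Relative change of value versus baseline, in percent."""
    return (value - baseline) / baseline * 100


for name, tokens_per_sec in experiments.items():
    change = percent_change(tokens_per_sec, BASELINE)
    print(f"{name}: {tokens_per_sec} tokens/sec ({change:+.1f}%)")
```

This prints roughly +0.9% for the optimized flags, -3.4% for OpenMP, and -4.0% for BLAS. The compiler flags are a small win, while the other two options cost a few percent on this setup.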

Next, I will have fun with parameter tuning and quantization. The goal is to get above 100 tokens/sec.

BIG THINGS are coming!

Hire an AI BDR & Get Qualified Meetings On Autopilot

Outbound requires hours of manual work.

Hire Ava who automates your entire outbound demand generation process, including:

Intent-Driven Lead Discovery Across Dozens of Sources

High Quality Emails with Human-Level Personalization

Follow-Up Management

Email Deliverability Management

After o3, OpenAI is “confident” that they know how to build AGI

(Source)

I read Sam Altman’s reflection letter, and 2 sentences stood out.

“We are now confident we know how to build AGI as we have traditionally understood it. We believe that, in 2025, we may see the first AI agents ‘join the workforce’ and materially change the output of companies.”

It was leaked that OpenAI will launch Operator, a true AI agent.

“Superintelligent tools could massively accelerate scientific discovery and innovation well beyond what we are capable of doing on our own, and in turn massively increase abundance and prosperity.”

As the Japanese firm Sakana AI has already shown, and as o3 definitely proved, AI will push scientific boundaries. It will be superintelligent and will probably push cutting-edge research radically forward in all fields.

CES is currently going on in Las Vegas. The products I have seen coming out of it are crazy. I might cover a thing or two from CES next time.

Best,

Martin 🙇

I recommend:

Beehiiv if you write newsletters.

Superhuman if you write a lot of emails.

Cursor if you code a lot.

Bolt.new for full-stack development.

Follow me on X.com.

AI for your org: We build custom AI solutions for half the market price and time (building with AI Agents). Contact us to know more.