Hey {{first_name}},

2025 is the year of AI agents. And since agents are powered by LLMs, the better the LLMs, the better the agents. That covers raw performance, but also features like context window length, latency, and hallucination rates.

Today on the docket:

LLMs: Alibaba’s new QwQ-32B establishes new standards

AI Agents: Cursor’s coding agent connects to data efficiently via MCP servers (Anthropic’s protocol), with Composio streamlining the process.

And at the bottom, our productivity hack for creating killer slides fast. ⬇️⬇️

Alibaba’s QwQ-32B establishes new standards for AI models

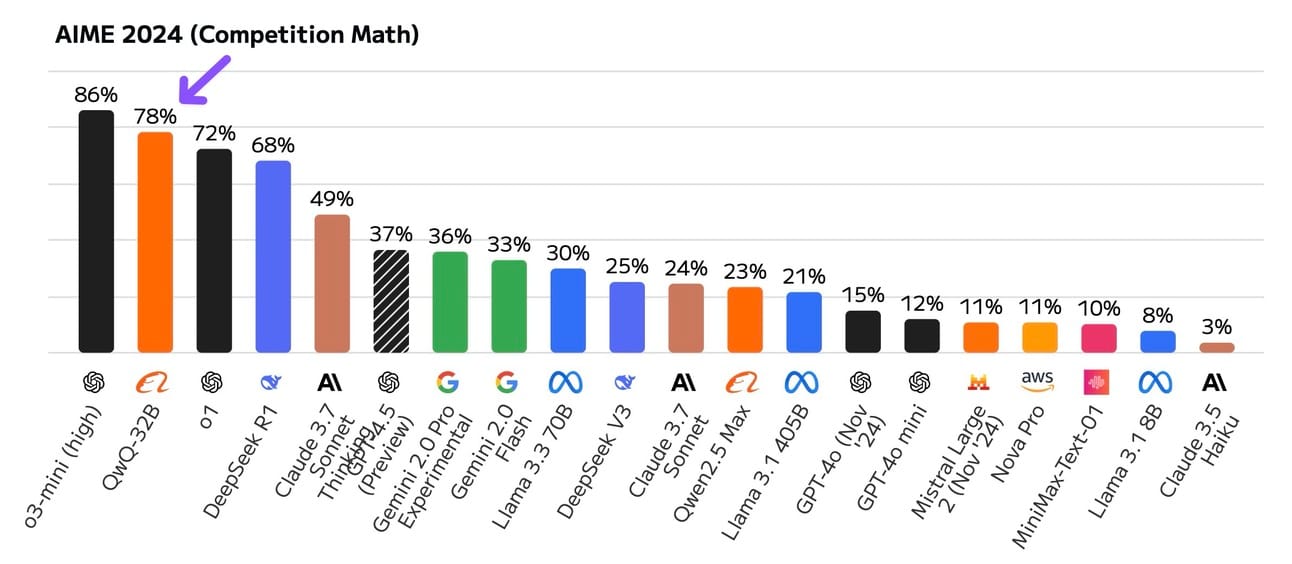

The Chinese company Alibaba has released a reasoning LLM with the slightly cryptic name QwQ-32B. The latter part stands for its number of parameters: 32 billion. For comparison, DeepSeek-R1 has 671 billion (about 21x as many).

This is a big deal, because more parameters mean higher compute costs and higher latency.
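To make the size gap concrete, here is a rough back-of-the-envelope sketch. It uses the two parameter counts quoted above and assumes 2 bytes per parameter (16-bit weights), which is an assumption on our side and not an official figure. Real memory and compute needs also depend on precision, architecture, and serving setup.

```python
# Back-of-the-envelope sizing. bytes_per_param=2 assumes 16-bit weights,
# which is an illustrative assumption, not a published specification.

def weights_gb(params: float, bytes_per_param: int = 2) -> float:
    """Approximate size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


QWQ_PARAMS = 32e9   # parameter count quoted in this newsletter
R1_PARAMS = 671e9   # parameter count quoted in this newsletter

ratio = R1_PARAMS / QWQ_PARAMS
print(f"DeepSeek-R1 has about {ratio:.0f}x the parameters of QwQ-32B")
print(f"QwQ-32B weights at 16 bit: roughly {weights_gb(QWQ_PARAMS):.0f} GB")
```

Under that assumption the weights alone come to roughly 64 GB, which is in the same ballpark as the 65GB download mentioned in this issue.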

This relatively small model significantly outperforms very large models in math (AIME benchmark). It is ahead of R1 and OpenAI’s o1.

It performs strongly in other benchmarks as well.

It is open source: download it (65 GB) and work with it here:

ModelScope: https://modelscope.cn/models/Qwen/QwQ-32B

Qwen Chat: https://chat.qwen.ai

With QwQ, we see another 90% cut in the price of generating text.

Meanwhile at Meta

Llama 4 was set to be released in January, but R1 made Meta rethink and rework its model.

With Llama 4, Meta has to release something stronger than QwQ-32B. That’s a must. But in the meantime, DeepSeek has announced that R2 is coming in May 2025. 🙈

Turbulent times ahead, but all good for consumers.

Models will get cheaper and more performant.

Composio supports MCP now

When working with tools like Cursor AI, Windsurf AI, or Anthropic’s Claude, you want to connect them reliably to various data sources. MCP (Model Context Protocol) is a protocol that streamlines that process.

With Composio, you no longer have to struggle with setting up MCP servers or worry about server reliability, availability, and authentication complexity.

Composio has built the largest source of fully managed MCP servers, with complete auth support.

What they have to offer

Fully managed MCP servers for 100+ apps like Linear, Slack, Notion, Calendly, etc.

Managed auth (OAuth, API key, Basic) for applications

Improved tool calling accuracy

That is a big deal, as it saves a lot of time.
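For the curious, here is a minimal sketch of what an agent sends to an MCP server under the hood. MCP messages follow JSON-RPC 2.0, and `tools/list` and `tools/call` are method names from the MCP specification. Everything else is illustrative: the tool name `create_issue` and its arguments are hypothetical, no transport or authentication is shown, and this is not Composio's API.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # simple counter for JSON-RPC request ids


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object, the message format MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Call one tool by name. "create_issue" and its arguments are hypothetical.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "create_issue", "arguments": {"title": "Fix login bug"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

A managed MCP server takes care of hosting and auth around this exchange, so the agent only has to discover the tools and call them.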

Productivity hack with Grok-3 & Gamma
