100 seconds, packed with extraordinary tech advancements. ✅

Tech Deep Dive: Now you can run LLMs with up to 100B parameters locally on your CPU (no GPUs!) and get 5-7 tokens/second

Grok 2's unparalleled vision capabilities

Latest on humanoid robots

Learn best practices for building products/code with AI!

Based AI Agents → on blockchain

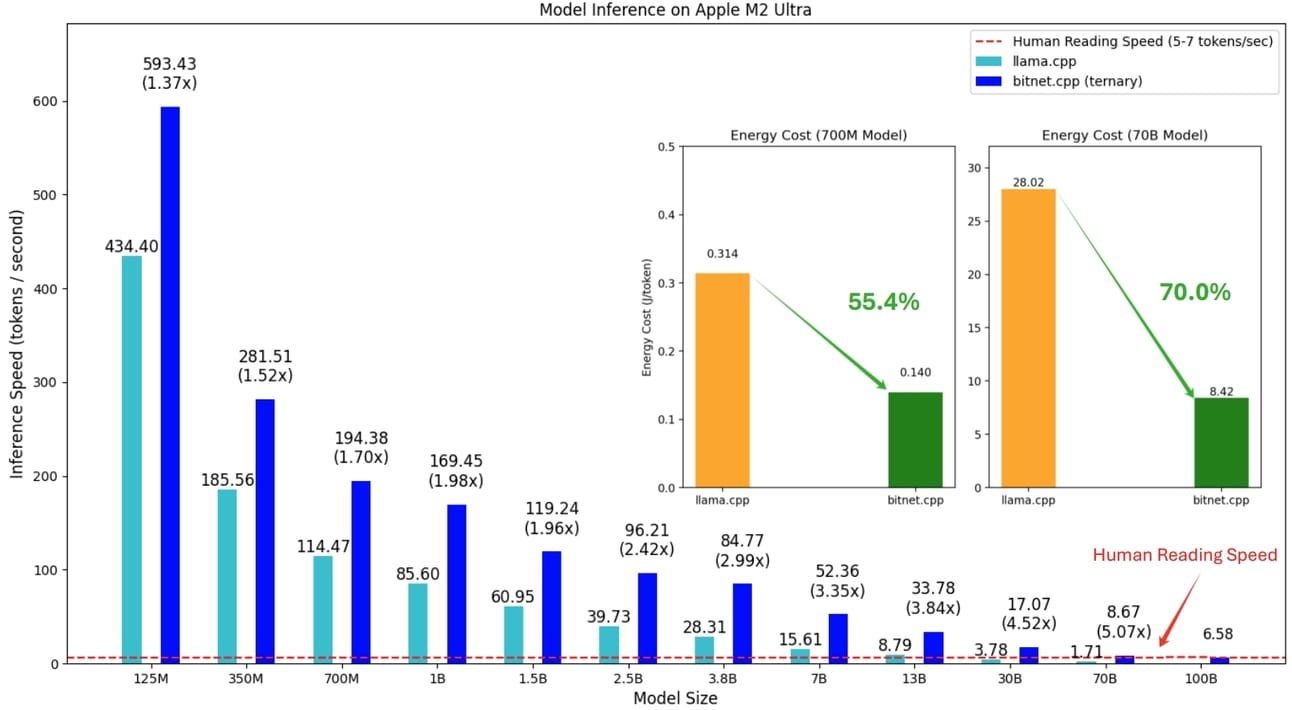

Now you can run LLMs with up to 100B parameters locally on your CPU (no GPUs!) and get 5-7 tokens/second

Microsoft has open-sourced BitNet, an ultra-efficient LLM framework that offers groundbreaking performance by drastically reducing the number of bits needed to store each model parameter.

It’s slightly technical, but the concept is not that difficult. Let me explain.

Traditional approaches use at least 16 bits to store each trained parameter, which is a decimal number such as 6.5.

Staying with the example, 6.5 would be stored using 1 bit for the sign (+/-), 5 bits for the exponent (order of magnitude), and 10 bits for the fraction (the number's significant digits). 16 bits in total.
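If you want to see that layout for yourself, here is a minimal Python sketch. It assumes the standard IEEE 754 half-precision format (which matches the 1/5/10 split above); the helper name `float16_fields` is mine, not part of any library.

```python
import struct

def float16_fields(value: float) -> tuple[str, str, str]:
    """Split a number into the sign, exponent and fraction bits of a 16-bit float."""
    # ">e" packs the value as a big-endian IEEE 754 half-precision float (2 bytes).
    (raw,) = struct.unpack(">H", struct.pack(">e", value))
    bits = f"{raw:016b}"
    # 1 sign bit, 5 exponent bits, 10 fraction bits.
    return bits[0], bits[1:6], bits[6:]

sign, exponent, fraction = float16_fields(6.5)
print(sign, exponent, fraction)  # prints: 0 10001 1010000000
```

Read it as: sign 0 (positive), exponent 10001 (17, minus the bias of 15, gives 2), and fraction 101 (1.625). So 1.625 × 2² = 6.5.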

BitNet, the 1-bit framework, is much simpler. It limits each trained parameter to just three values: -1, 0, or 1. Three possible values take about 1.58 bits to store (hence the name BitNet b1.58) instead of 16. This means no other values are possible. There is no 6.5.
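Here is a rough Python sketch of what "only -1, 0, or 1" can look like in practice. It assumes a simple scheme: divide each weight by the average absolute weight, round, and clip to the range -1..1. The function name `ternarize` and the sample weights are made up for illustration, and this is not BitNet's actual training code.

```python
import math

def ternarize(weights):
    """Map full-precision weights to -1, 0 or 1 (illustrative scheme, an assumption)."""
    # Scale by the mean absolute value so typical weights land near -1, 0 or 1.
    scale = sum(abs(w) for w in weights) / len(weights) or 1.0
    # Round to the nearest integer, then clip to the three allowed values.
    ternary = [max(-1, min(1, round(w / scale))) for w in weights]
    return ternary, scale

weights = [6.5, -0.2, -3.1, 0.4]  # made-up example weights
ternary, scale = ternarize(weights)
print(ternary)  # prints: [1, 0, -1, 0]

# Three possible values carry log2(3) bits of information per weight.
print(round(math.log2(3), 2))  # prints: 1.58
```

The 6.5 from before simply becomes 1. The model gives up precision per weight and makes up for it in speed and size.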

Why would they do this?

BitNet runs 4.1 times faster and has 8.9 times the throughput of models using the 16-bit representation. Period. Basta.

By using only integer addition instead of complex multiplications, BitNet is not only optimized to be faster but also requires significantly less memory.
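To make the "addition instead of multiplication" point concrete, here is a small Python sketch. With weights restricted to -1, 0, and 1, a dot product needs no multiplications at all: you add, subtract, or skip. The names `ternary_dot` and `model_size_gb` are mine, and the memory estimate is a back-of-the-envelope assumption that only counts the weights.

```python
def ternary_dot(weights, activations):
    """Dot product with weights in {-1, 0, 1}, using only additions and subtractions."""
    total = 0
    for w, x in zip(weights, activations):
        if w == 1:
            total += x   # weight +1: add the input
        elif w == -1:
            total -= x   # weight -1: subtract the input
        # weight 0: skip the input entirely
    return total

def model_size_gb(num_params, bits_per_param):
    """Rough weight storage in gigabytes (ignores activations and packing overhead)."""
    return num_params * bits_per_param / 8 / 1e9

print(ternary_dot([1, 0, -1, 1], [3, 5, 2, 4]))  # prints: 5

# A 100B-parameter model: 16 bits per weight vs. 2 bits per packed ternary weight.
print(model_size_gb(100_000_000_000, 16))  # prints: 200.0
print(model_size_gb(100_000_000_000, 2))   # prints: 25.0
```

The 2 bits per weight is a simple packing assumption on my side; the theoretical minimum for three values is about 1.58 bits.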

It is genius!

And this is huge because …

… with this, we can do more in less time, which is vital for AI’s progress. Look at reasoning models such as o1 (Claude 3.5 Opus might be one as well). You want them to process thousands of ideas in under a second. Perfect!

Further, this results in lower energy usage and reduced infrastructure costs. This is especially helpful for edge and mobile devices, where resources are limited, as well as for real-time applications like the ones we see with OpenAI’s Realtime API. (I wrote about it and how you can apply it.)

How do you get LLMs to run on your laptop with 1-bit BitNet? Here is the GitHub repo plus the steps to do it.

⚠ If you absolutely want me to do a video on how to implement it, let me know in the comments or reply to this email.

Be an everyday genius 🧑‍🔬

Learning a little every day can have a huge impact—especially if you're learning on Brilliant. Explore thousands of bite-sized, interactive lessons on everything from math and data analysis to programming, AI, and beyond.

xAI’s Grok 2 (the AI model) now has incredibly detailed vision capabilities (my demo)

I have worked with Anthropic, Google, OpenAI, and other top-notch AI companies, but xAI’s vision capabilities are unparalleled. See for yourself in my demo below.

Latest updates on humanoid robots 🤖

Clone has built Torso, an upper-body robot that is actuated solely by artificial muscles. With that, robot anatomy is getting closer to human biology.

Finally, a humanoid robot with a natural, human-like walking gait. Chinese company EngineAI just unveiled their life-size general-purpose humanoid SE01.

Learn best practices for building products/code with AI!

The AI Summit Seoul has been at the forefront of tech for years.

Only signal, no noise.

This year, I am happy to share GenerativeAI.net's self-developed framework for using current AI tools most effectively in product development.

Additionally, I'll host a hands-on workshop this year. Participate, learn frameworks, build your own agent, and enjoy working with an agent at your side.

AI Agents + Blockchain = Based Agent ⛓

Create AI Agents with full on-chain functionality in less than 3 minutes.

The era of Autonomous Onchain Agents is here, built with the Coinbase SDK, OpenAI, and Replit.

To get started, you need an API key from https://cdp.coinbase.com, a key from OpenAI, and to fork the Replit template. It couldn't be easier to start adding whatever functionality you want to these agents.
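Before you run the agent, it can save you some head-scratching to check that all keys are actually set. Below is a minimal Python sketch of such a pre-flight check. The environment variable names are placeholders I picked for illustration; use whatever names the Replit template expects.

```python
import os

# Hypothetical variable names for this sketch; check the template for the real ones.
REQUIRED_KEYS = ("CDP_API_KEY_NAME", "CDP_API_KEY_PRIVATE_KEY", "OPENAI_API_KEY")

def missing_keys(env, required=REQUIRED_KEYS):
    """Return the names of required keys that are absent or empty in env."""
    return [name for name in required if not env.get(name)]

if __name__ == "__main__":
    missing = missing_keys(os.environ)
    if missing:
        print("Missing keys:", ", ".join(missing))
    else:
        print("All keys are set. Ready to start the agent.")
```

On Replit, you would typically store these values as secrets rather than pasting them into your code.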

Find the how-to here: https://github.com/murrlincoln/Based-Agent/tree/main/Based-Agent

I can’t wait to see what you build. 🙂

⚠ If you want me to demo how to implement it, let me know in the comments or reply to this email.

That’s a wrap! I hope you enjoyed it.

Martin

Do you write newsletters? I use Beehiiv and highly recommend it.

AI for your org: We build custom AI solutions at half the market price and in half the time (building w/ AI Agents). Contact us to learn more.

Would you like to sponsor a post?