OpenAI released o1 (o1-preview and o1-mini), and it is very strong at reasoning.

Also, we’ve learned the basics of building products fast with AI. Now it's time to roll up our sleeves and build something that matters.

So, I’ll be sharing my product-building journey with you. → Day 1/x.

Lastly, batch 1 of Everyone can Code!✨ is about to close. You have exactly 3 hours left after this episode drops (noon CET) to jump in. After that, it’s gone indefinitely.

Reading time is 2:30 min. Let’s go!

OpenAI o1 - The Best Model Ever, Especially at Reasoning

(Source)

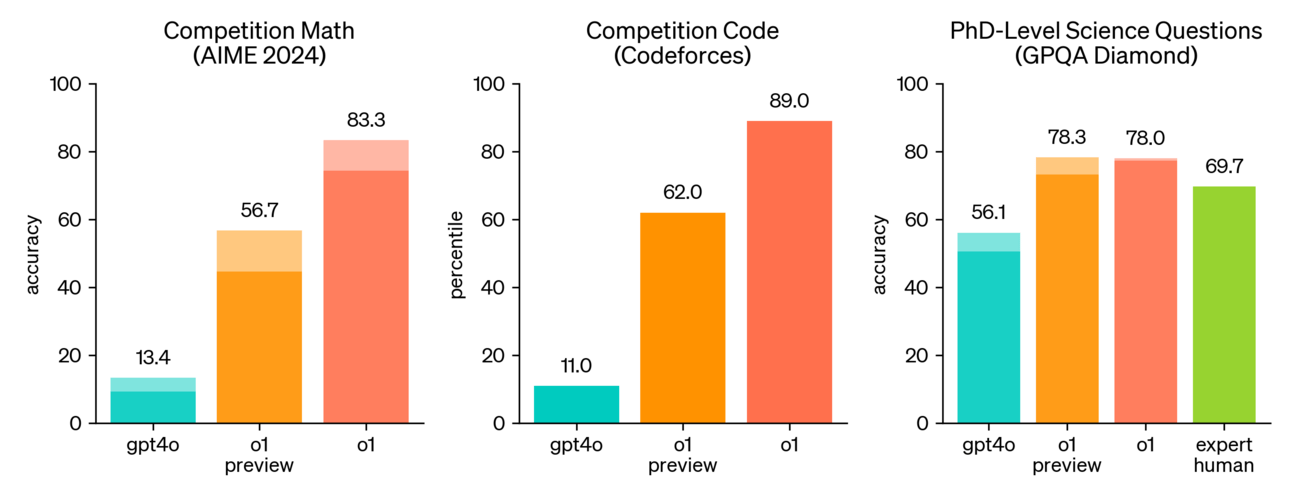

OpenAI o1 ranks in the 89th percentile on competitive programming questions (Codeforces) and places among the top 500 U.S. students in a qualifier for the USA Math Olympiad (AIME). To top it off, it exceeds human PhD-level accuracy on the GPQA benchmark of physics, biology, and chemistry problems.

It is that good.

What I find especially interesting is how it crushes GPT-4o in math and code.

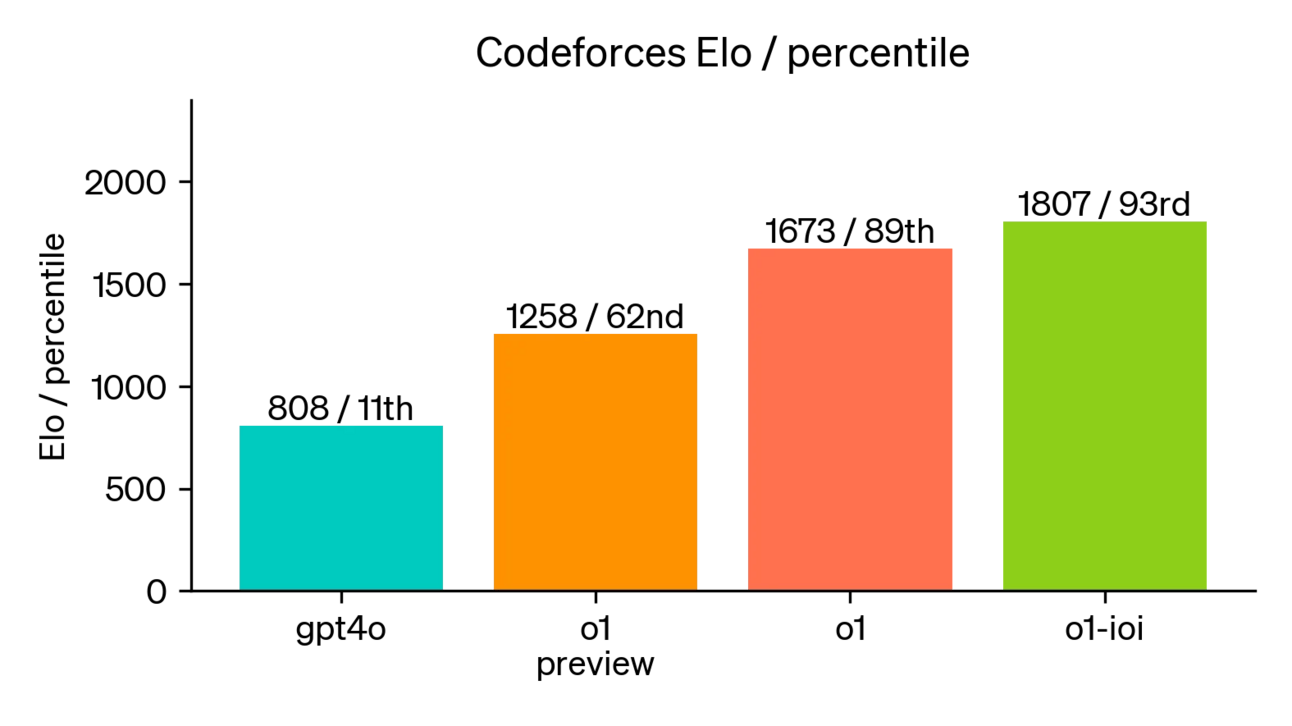

For code, OpenAI still has two other models up its sleeve. One of them, o1-ioi, is focused on the International Olympiad in Informatics (IOI).

OpenAI trained this model for the 2024 IOI, where it scored 213 points and ranked in the 49th percentile. With a relaxed submission limit (10,000 submissions per problem), it scored 362.14, above the gold medal threshold. In simulated Codeforces contests, it achieved an Elo rating of 1807, outperforming 93% of competitors.

It thinks long and deep, applying chain-of-thought (CoT) reasoning in the background.

Exactly how it works will remain hidden from the public. OpenAI-style. 🤷

I’m trying it out extensively. I’ll probably spend part of my weekend attempting unsolved math problems, as I already mentioned in this episode.

Day 1 of building products with AI - preparing the first project

(A mini-series transparently documenting how I build products with AI, sharing the wins and the screw-ups.)

When building a product solo with an AI co-developer, avoid unnecessary complexity. Complexity creeps in quickly, especially when a project scales.

Mindset

If you want to stay laser-focused on your product’s mission with near-zero entropy, these are the must-reads (and must-watches):

Elon Musk’s 5-step process for building products; watch at least 6 times

a16z’s post on how AI will turn capital into labor

Paul Graham’s Founder Mode

How to guarantee your product idea/feature sells

The Product Manifesto

With that in mind, I wrote down the guidelines for building stuff going forward. I call it the Product Manifesto. Keeping it simple (KIS), here is the first version:

Build lean. No extra features that don’t provide value.

Build and test with the real market.

Iterate fast.

Build features A, B, and C, test with the market, and follow the strongest signals.

Build cheaply. In 90% of cases, there is a no-cost option. Drive for an insane profit margin.

Automate 100% of the project. Use CI/CD, cronjobs, free services, and more.

Use Ahrefs and make sure your page has a score of at least 80.

Connect it to GenerativeAI.net. The two sites should endorse each other.

Share progress with Newsletter, YouTube, X.com, and perhaps TikTok. (That is my marketing department.)

Speaking of sharing progress: what is your preferred platform?

I'll keep it short and sweet. Next time, I'll dive into what my next project is and why I chose it. Stay tuned.

NotebookLM now lets you listen to a conversation about your sources

Google's new Audio Overviews feature in NotebookLM turns notes, PDFs, and Docs into AI-generated audio discussions. Powered by Gemini 1.5, it processes up to 50 sources (25 million words total) and is ideal for auditory learners, especially for academic papers and presentations.

I tried it, and it is craaaazy goood! 🤯

I pasted in the text of "Day 1 of building products with AI - preparing the first project," and this is the result (I hope you can hear it; sometimes it is buggy):

Newly released: Mistral’s Pixtral → Open-Source!

Mistral AI's Pixtral 12B is a 12-billion-parameter multimodal model that combines language and vision processing. It accepts images at resolutions up to 1024x1024, handles image-understanding tasks such as captioning and answering questions about images, and is available under the open-source Apache 2.0 license.

That’s a wrap.

Have a good weekend!

-Martin

Would you like to sponsor a post? → www.passionfroot.me/ai

Spread the word! Referral program.

Our webpage: https://generativeai.net