Friends,

Quick pulse-check on the week: AI is escaping the chat box—into wet labs, into datacenters, and into your default apps. Here’s what matters.

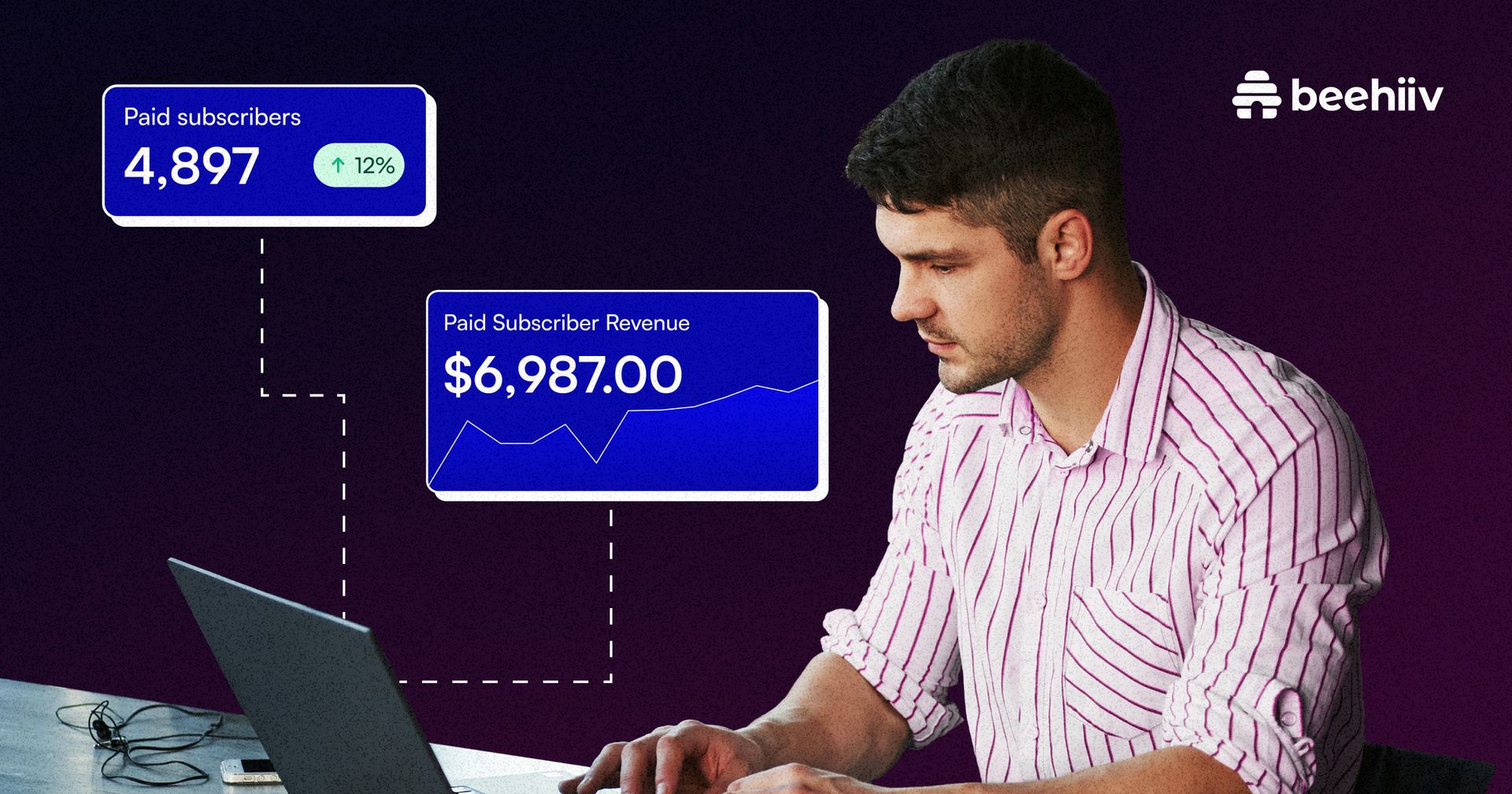

This newsletter you couldn’t wait to open? It runs on beehiiv — the absolute best platform for email newsletters.

Our editor makes your content look like Picasso in the inbox. Your website? Beautiful and ready to capture subscribers on day one.

And when it’s time to monetize, you don’t need to duct-tape a dozen tools together. Paid subscriptions, referrals, and a (super easy-to-use) global ad network — it’s all built in.

beehiiv isn’t just the best choice. It’s the only choice that makes sense.

(✨ If you don’t want ads like these, Premium is the solution.)

GPT-5 Ran a Wet Lab (Seriously)

OpenAI + Red Queen Bio put GPT-5 in charge of a real experimental loop, and it boosted molecular cloning efficiency by 79×.

GPT-5 iterated on a cloning workflow over multiple rounds: humans and lab robots ran the experiments, and GPT-5 steered.

Result: 79× more sequence-verified clones from the same input DNA than the baseline protocol.

Key finding: GPT-5 proposed a new enzymatic mechanism (RecA + gp32) and turned it into a new cloning approach.

Why it matters: this is what “AI for science” looks like when it touches reality—tight loops, real data, real gains.

(Source)

100–200 MW for Robot Training: Tesla’s Cortex 2

AI’s physical footprint keeps growing: new permit filings shed light on Tesla’s Cortex 2, a datacenter-scale cluster for training workloads (FSD and, increasingly, Optimus).

The filings point to a 100–200 MW-class facility: the fire detection and alarm work is scoped for a site of that size.

That’s “tens of thousands of GPUs + cooling + storage” territory.
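A back-of-envelope sketch of how a power budget maps to a GPU count, under loudly labeled assumptions: each GPU draws about 700 W (the H100 SXM’s rated TDP), server overhead (CPUs, memory, networking) adds roughly 50% on top, and a PUE of about 1.3 covers cooling and power-delivery losses. None of these figures come from the Cortex 2 filings, and the helper `estimate_gpu_count` is hypothetical.

```python
def estimate_gpu_count(facility_mw: float, gpu_watts: float = 700.0,
                       server_overhead: float = 1.5, pue: float = 1.3) -> int:
    """Rough number of GPUs a facility power budget could support.

    All defaults are illustrative assumptions, not figures from the Cortex 2 filings.
    """
    # IT power per GPU: the accelerator plus its share of CPUs, memory, NICs, switches.
    it_watts_per_gpu = gpu_watts * server_overhead
    # Facility power per GPU: PUE scales IT load up to include cooling and power losses.
    facility_watts_per_gpu = it_watts_per_gpu * pue
    # Convert MW to W and see how many GPUs fit in the budget (rounded down).
    return int(facility_mw * 1_000_000 // facility_watts_per_gpu)


for mw in (100, 200):
    print(f"{mw} MW -> ~{estimate_gpu_count(mw):,} GPUs")
```

Under these assumptions, 100 MW supports roughly 73,000 GPUs and 200 MW roughly 146,000. Hotter next-generation accelerators, or power reserved for storage and other systems, push the count down, so treat the result as an order-of-magnitude check rather than a spec.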

Big picture: progress isn’t just better models—it’s brute-force infrastructure, built fast.

(Source)

Gemini 3 Flash: Pro-ish Intelligence at Flash Speed

Google launched Gemini 3 Flash, the fast, low-cost model meant to be the default workhorse. It’s already available in Cursor.

Compared to Gemini 3 Pro, Flash is positioned as:

~3× faster (lower latency)

~¼ the cost (and higher rate limits)

Stronger agentic coding: 78% on SWE-bench Verified

Strong multimodal understanding (text, image, video, audio), close to Pro

Trade-off:

Slightly lower peak intelligence vs Pro (e.g., 33.7% vs 37.5% on Humanity’s Last Exam, no tools).

Pro still wins on the hardest math, the deepest reasoning, and the nastiest code.

Flash is now the default/free model in the Gemini app.

GPT Image 1.5: OpenAI’s New Flagship Image Model

OpenAI released GPT-Image-1.5 (a.k.a. the new ChatGPT Images).

Up to 4× faster generation

More reliable, surgical edits (change what you ask—keep the rest)

Better instruction following and more reliable rendering of dense text

About 20% cheaper in the API than the previous image model

The graph below shows how it compares with other models, including Google’s Nano Banana Pro.

Generated with GPT-Image-1.5 (infographic of the LM Arena leaderboard):

OpenAI Goes Geopolitical + Everyone Doubles Down on Compute

OpenAI hired former UK Chancellor George Osborne to lead “OpenAI for Countries,” essentially “Stargate for Countries”: exporting an “AI stack” through partnerships with governments.

The meta-trend hasn’t changed: compute wins. Greg Brockman’s blunt take is that compute is what keeps working for intelligence.

That's all for this week!

Happy building!

🙇Martin

I recommend:

Beehiiv if you write newsletters.

Superhuman if you write a lot of emails.

Cursor if you code a lot.

Follow me on X.com.

AI for your org: we build custom AI solutions at half the market price and in half the time (by building with AI agents). Contact us to learn more.